Note: The images used below are for representational purposes only

Qure.ai is one of the world’s leading developers of Artificial Intelligence solutions for medical imaging and radiology applications. Pioneers in the field, they are amongst the most published AI research groups in the world, with more than 30 publications and presentations in leading journals and conferences, including the first paper on AI in the prestigious journal The Lancet.

With tens of thousands of chest X-rays passing through the Qure.ai algorithms every day, it is critical for the Qure data science leadership to know the real-time performance of their algorithms across their user base. The performance of AI is known to vary dramatically with patient ethnicity and equipment vendor characteristics, so as an AI developer’s user base scales, the likelihood of an error creeping through the system increases. The challenge is to orchestrate a mechanism in which randomly picked chest X-rays are double-read by a team of radiologists, the labels established during these reads are compared against the AI outputs, and a dashboard presents real-time performance metrics (Area Under Curve, Sensitivity, Specificity, etc.) with the ability to deep dive into the false positives and false negatives.

How does the leadership team at Qure.ai create such a system without investing significant engineering effort?

CARPL’s Real-Time AI validation workflow allows AI developers to monitor the performance of their algorithms in real-time. Reshma Suresh, the Chief Operating Officer at Qure.ai, uses CARPL to get real-time ground truth inputs from radiologists and then compares the radiologist reads to the AI outputs, subsequently creating a real-time performance dashboard for QXR – Qure.ai’s Chest X-Ray AI algorithm.
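To make the comparison concrete, here is a minimal Python sketch of how double-read labels might be scored against AI outputs. It is an illustration only and not CARPL's or Qure.ai's implementation: the `Case` record, the rule of keeping only cases where both readers agree, the 0.5 operating threshold, and the sample values are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """One sampled chest X-ray (hypothetical record layout)."""
    case_id: str
    reader_a: int    # first radiologist: 1 = abnormal, 0 = normal
    reader_b: int    # second radiologist
    ai_score: float  # AI abnormality score in [0, 1]


def agreed(cases):
    """Assumed ground-truth rule: keep cases where both readers agree."""
    return [c for c in cases if c.reader_a == c.reader_b]


def confusion_ids(cases, threshold=0.5):
    """Split agreed cases into TP / FP / TN / FN lists of case ids."""
    out = {"tp": [], "fp": [], "tn": [], "fn": []}
    for c in cases:
        predicted = 1 if c.ai_score >= threshold else 0
        if c.reader_a == 1:
            key = "tp" if predicted == 1 else "fn"
        else:
            key = "fp" if predicted == 1 else "tn"
        out[key].append(c.case_id)
    return out


def auc(cases):
    """AUC as the chance a random abnormal case outscores a random normal one."""
    pos = [c.ai_score for c in cases if c.reader_a == 1]
    neg = [c.ai_score for c in cases if c.reader_a == 0]
    if not pos or not neg:
        return float("nan")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up sample data, purely for illustration.
cases = [
    Case("cxr-001", 1, 1, 0.92),
    Case("cxr-002", 0, 0, 0.10),
    Case("cxr-003", 0, 0, 0.71),  # AI false positive
    Case("cxr-004", 1, 1, 0.35),  # AI false negative
    Case("cxr-005", 1, 0, 0.55),  # readers disagree, so excluded
    Case("cxr-006", 0, 0, 0.20),
]

truth = agreed(cases)
ids = confusion_ids(truth)
sensitivity = len(ids["tp"]) / (len(ids["tp"]) + len(ids["fn"]))
specificity = len(ids["tn"]) / (len(ids["tn"]) + len(ids["fp"]))
area_under_curve = auc(truth)
```

Keeping the case ids for each cell of the confusion matrix, rather than only the counts, is what would let a dashboard link each false positive or false negative back to the underlying image for review.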

CARPL makes the process very easy:

CARPL is deployed on Qure.ai’s infrastructure, allowing Qure to take control of all the data that comes onto CARPL!

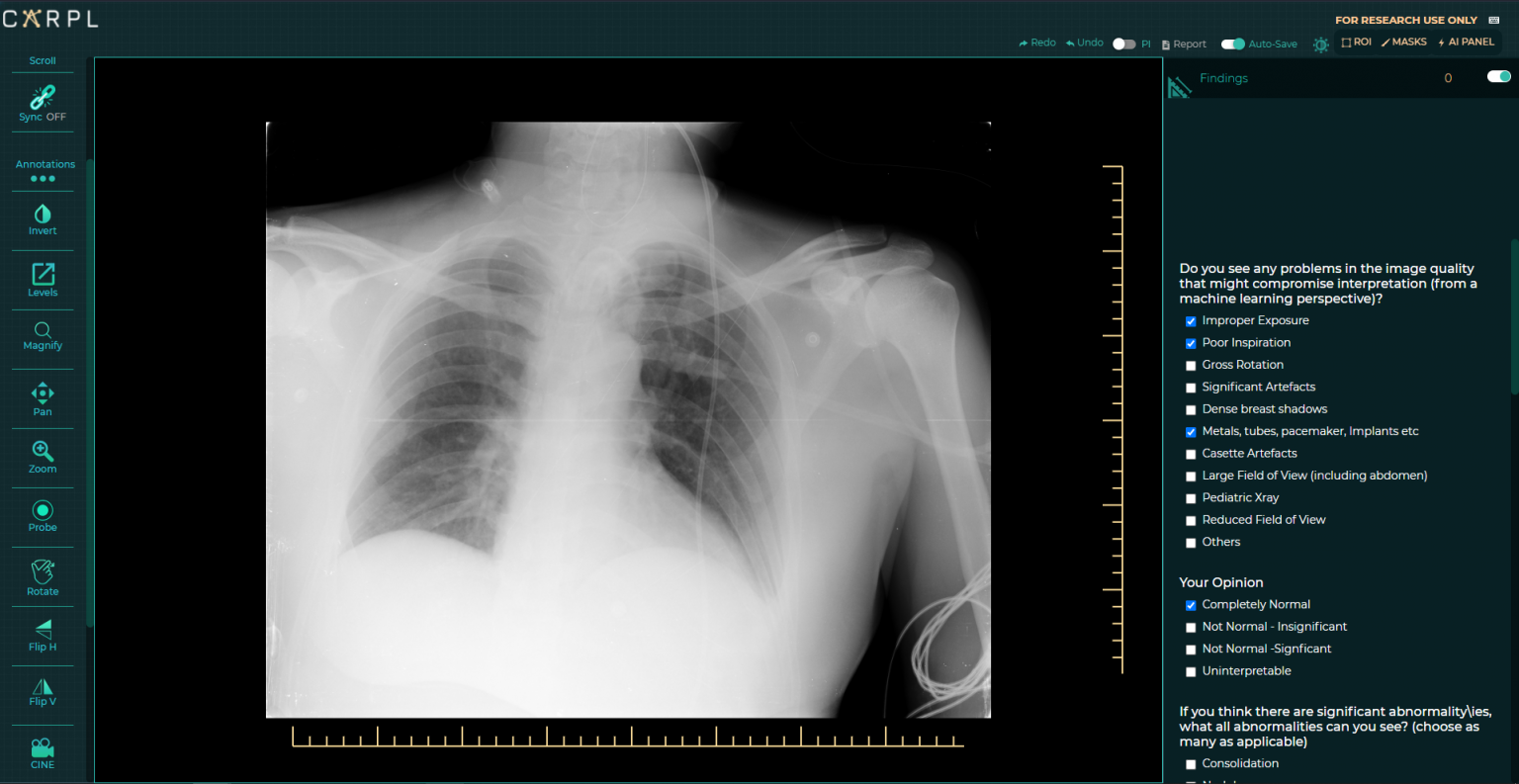

Example of a case which is otherwise normal but was wrongly classified by AI as abnormal, possibly due to poor image quality and a coiled nasogastric tube in the oesophagus

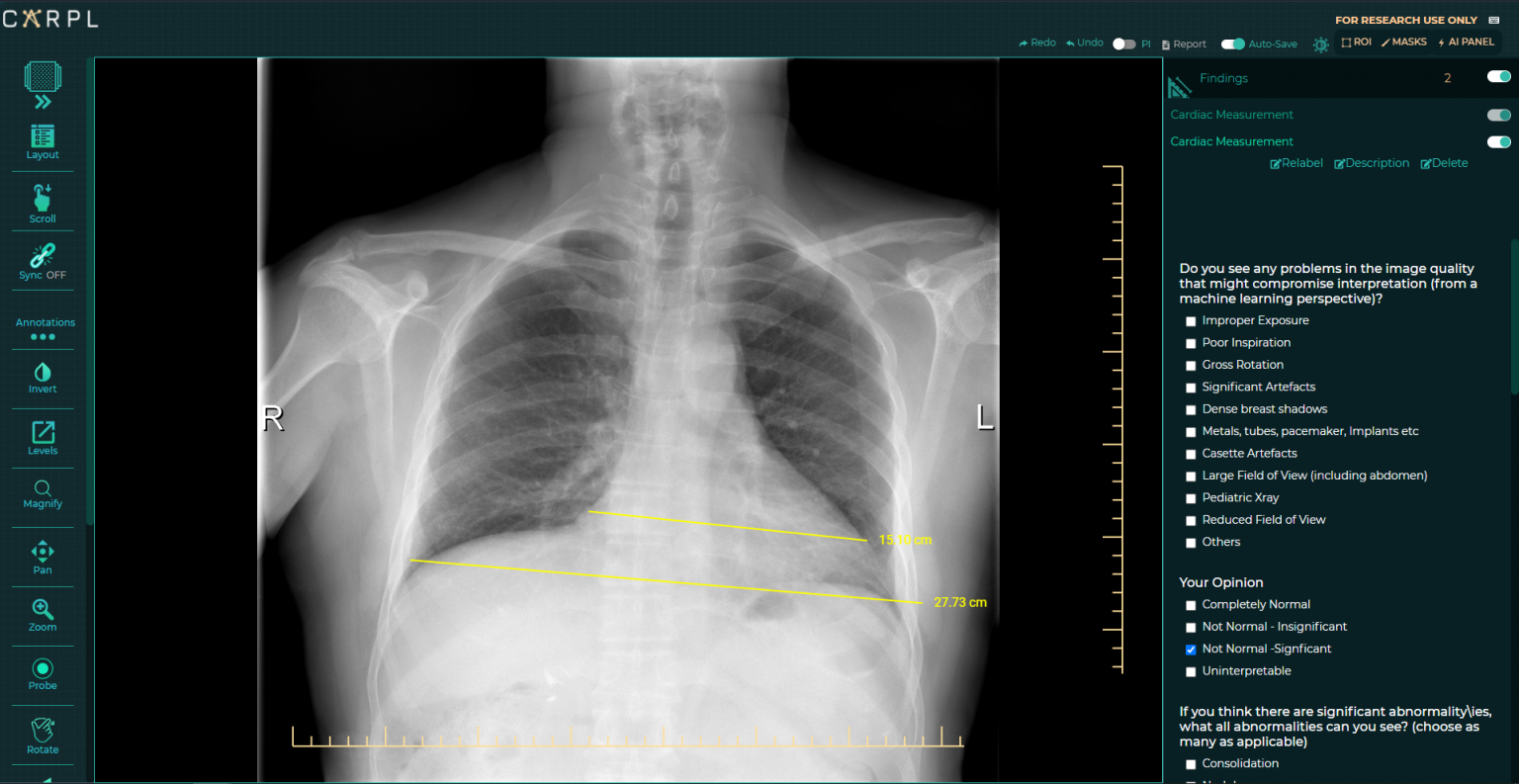

Example of a case where the radiologist identified cardiomegaly

Representational Image of a Real-Time Validation Project on CARPL

While much has been said about monitoring AI in clinical practice at the hospital level, it is even more important for AI developers themselves to monitor AI in real time so that they can detect shifts in model performance and intervene as and when needed. This moves AI monitoring and the consequent improvement from a retrospective, post-facto process to a proactive one. As we build on our vision to make CARPL the singular platform behind all clinically useful and successful AI, working with AI developers to help them establish robust and seamless processes for monitoring AI is key.

Anonymised chart to show a fall in AUC detected by real-time monitoring of AI by an AI developer – image for representational purposes only – not reflective of real world data.
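One simple way to detect such a fall in AUC is to recompute it over a sliding window of the most recently labelled cases and flag any drop below a baseline. The sketch below assumes this approach for illustration only; the `RollingAucMonitor` class, the window size, the baseline of 0.95, the allowed drop of 0.05, and the sample stream are hypothetical and do not describe how CARPL works internally.

```python
from collections import deque


def auc(labels, scores):
    """AUC via pairwise comparison; None if one class is missing."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


class RollingAucMonitor:
    """Hypothetical monitor: alert when windowed AUC falls too far below baseline."""

    def __init__(self, window=200, baseline_auc=0.95, max_drop=0.05):
        self.window = deque(maxlen=window)  # oldest cases fall out automatically
        self.baseline_auc = baseline_auc
        self.max_drop = max_drop

    def add(self, label, score):
        """Record one radiologist-labelled case; return (current_auc, alert)."""
        self.window.append((label, score))
        labels, scores = zip(*self.window)
        current = auc(labels, scores)
        alert = current is not None and (self.baseline_auc - current) > self.max_drop
        return current, alert


# Made-up stream of (radiologist label, AI score); quality degrades midway.
stream = [(1, 0.9), (0, 0.1), (1, 0.8), (0, 0.2),
          (1, 0.3), (0, 0.7), (1, 0.4), (0, 0.6)]

monitor = RollingAucMonitor(window=4, baseline_auc=0.95, max_drop=0.05)
history = [monitor.add(label, score) for label, score in stream]
first_alert = next(i for i, (_, alert) in enumerate(history) if alert)
```

A small window reacts quickly but is noisy, while a large window is steadier but slower to flag a real shift, so the window and threshold would need tuning to the actual case volume.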

We stay true to our mission of Bringing AI from Bench to Clinic.